Skills

- Mapping of algorithms to SoC platforms

- Embedded realtime systems

- Hardware acceleration

- Performance optimisation

- Embedded Linux

- Realtime GPU rendering with KMS/DRM

- Automotive Ethernet

- gPTP time synchronisation

- Implementation of embedded prototypes and demonstrators

- Measurement and evaluation

- Timing analysis

- Power analysis

Open Source Projects

An IEEE 1588 PTP library for the Teensy 4.1

A Linux kernel module to provide a realtime clock offset

Tiny Tapeout 2 - German Traffic Light State Machine

Tiny Tapeout is an educational project that makes it easy to get digital designs manufactured on a real chip. Project 12 is a state machine that generates signals for vehicle and pedestrian traffic lights at an intersection of a main street and a side street. A blinking yellow light for the side street is generated in the reset state.
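A software model can make the behavior of such a controller easier to follow. The sketch below is a hypothetical Python model, not the actual Tiny Tapeout design. The phase names, tick durations, the pedestrian signal (assumed to cross the side street, so it shows "walk" while the main street is green), and the choice to leave reset through an all-red phase are all assumptions. It does follow the German signal sequence of red, red-yellow, green, yellow, red.

```python
from dataclasses import dataclass

# Hypothetical phase table: (name, main street, side street, pedestrians, ticks).
# Pedestrians are assumed to cross the side street, so they walk with main green.
PHASES = [
    ("main_green",      "green",      "red",        "walk",      10),
    ("main_yellow",     "yellow",     "red",        "dont_walk",  3),
    ("all_red_1",       "red",        "red",        "dont_walk",  1),
    ("side_red_yellow", "red",        "red_yellow", "dont_walk",  1),
    ("side_green",      "red",        "green",      "dont_walk",  6),
    ("side_yellow",     "red",        "yellow",     "dont_walk",  3),
    ("all_red_2",       "red",        "red",        "dont_walk",  1),
    ("main_red_yellow", "red_yellow", "red",        "dont_walk",  1),
]
RESTART_PHASE = 6  # assumption: leave reset through an all-red phase


@dataclass
class TrafficLightFSM:
    phase: int = RESTART_PHASE
    ticks: int = 0
    blink_on: bool = False

    def step(self, reset: bool) -> tuple[str, str, str]:
        """Advance one clock tick and return (main, side, pedestrian) signals."""
        if reset:
            # Reset state: the side street blinks yellow, everything else is dark.
            self.phase, self.ticks = RESTART_PHASE, 0
            self.blink_on = not self.blink_on
            return ("off", "yellow" if self.blink_on else "off", "off")
        _, main, side, ped, duration = PHASES[self.phase]
        self.ticks += 1
        if self.ticks >= duration:  # phase time elapsed: move to the next phase
            self.phase = (self.phase + 1) % len(PHASES)
            self.ticks = 0
        return (main, side, ped)


fsm = TrafficLightFSM()
print([fsm.step(reset=True)[1] for _ in range(4)])  # ['yellow', 'off', 'yellow', 'off']
```

Keeping the sequence in a table reduces the next-state logic to a phase index and a tick counter, which is also a natural way to think about the registers a small hardware implementation would need.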

Adventskranz (Advent wreath)

Pixelblaze Sunrise

Publications

Zeitdeterministische Systemarchitekturen für hochauflösende Matrix-Scheinwerfer (Time-Deterministic System Architectures for High-Resolution Matrix Headlights)

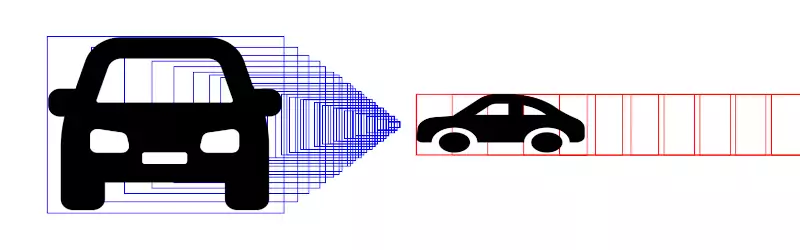

High-resolution matrix headlights are an advanced driver assistance system that provides the best possible illumination of the surroundings for safe and comfortable nighttime driving. In addition to glare-free high beams and adaptive light distributions, they can display safety-relevant information directly on the road. These applications require system architectures that enable precise temporal and spatial control of the light distribution, so that it is projected at the right time and in the right place on the road. In conventional system architectures, the accuracy and efficiency of light control are limited by high latencies, limited transmission bandwidths, and inaccurate mapping models. To solve these problems, this thesis designs time-deterministic system architectures based on a model of the system behavior. The focus is on precise compensation of the vehicle's ego-motion and on dynamic masking of road users to avoid glare. The relevant system parameters, including resolution, refresh rate, and latency, are identified from analytical models of the dynamic projection geometry and used to evaluate system architectures. Techniques such as asynchronous reprojection play a key role in this optimization: by transmitting displacement information instead of full light distributions, they can significantly reduce the data rate, lowering system latency while improving the efficiency of light control. Three architectural concepts were designed and evaluated: centralized, decentralized, and hybrid. Centralized architectures process the light distribution on powerful central hardware such as GPUs. This allows efficient computation and easy maintenance but places high demands on the transmission rate. Decentralized architectures, on the other hand, shift processing into the headlight modules themselves, which reduces latency but increases hardware requirements.

Hybrid architectures combine the strengths of both approaches, augmenting centralized computation with decentralized functions to enable latency-critical applications such as ego-motion compensation. The developed architectures were evaluated experimentally on prototype systems. The results show that hybrid approaches in particular significantly reduce latency and thus improve the stability of the light projections. Asynchronous reprojection minimizes the transmission data rate without compromising the precision of the projections.
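To illustrate why reprojection saves bandwidth, the arithmetic below compares two ways of delivering high-rate corrections: resending full light distributions at the correction rate, or sending full distributions at a lower content rate plus small displacement updates at the correction rate. All parameters (a 1024 × 512 pixel, 8-bit distribution, 60 Hz content, 600 Hz corrections, a 2 × 16-bit shift per update) are hypothetical and not taken from the thesis, and protocol overhead is ignored.

```python
def frame_stream_bps(width: int, height: int, bits_per_pixel: int, rate_hz: int) -> int:
    """Bit rate for resending the complete light distribution (no protocol overhead)."""
    return width * height * bits_per_pixel * rate_hz


def shift_stream_bps(bits_per_update: int, rate_hz: int) -> int:
    """Bit rate for sending only a displacement update per correction."""
    return bits_per_update * rate_hz


# Hypothetical parameters, not taken from the thesis.
WIDTH, HEIGHT, BITS_PER_PIXEL = 1024, 512, 8
CONTENT_HZ, CORRECTION_HZ = 60, 600
SHIFT_BITS = 2 * 16  # dx and dy as 16-bit integers

full_only = frame_stream_bps(WIDTH, HEIGHT, BITS_PER_PIXEL, CORRECTION_HZ)
reprojected = (frame_stream_bps(WIDTH, HEIGHT, BITS_PER_PIXEL, CONTENT_HZ)
               + shift_stream_bps(SHIFT_BITS, CORRECTION_HZ))
print(f"full frames at {CORRECTION_HZ} Hz: {full_only / 1e6:.1f} Mbit/s")
print(f"frames at {CONTENT_HZ} Hz + shifts at {CORRECTION_HZ} Hz: {reprojected / 1e6:.1f} Mbit/s")
```

With these assumed numbers the script reports about 2516.6 Mbit/s versus 251.7 Mbit/s. The displacement stream itself adds only 19.2 kbit/s, so the total is set almost entirely by the content rate rather than the correction rate.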

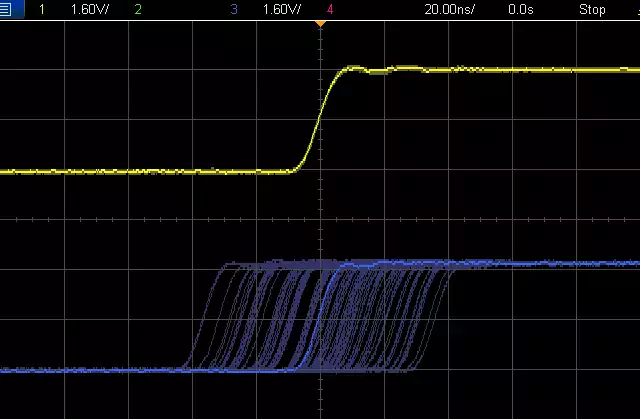

PTP-Synchronized Tri-Level Sync Generation for Networked Multi-Sensor Systems

Synchronization of sensor devices is crucial for concurrent data acquisition. Numerous protocols have emerged for this task, and some multi-sensor setups can only operate synchronously if the deployed protocols are converted into one another. This paper presents a bare-metal implementation of a Tri-Level Sync signal generator on a microcontroller unit (MCU) synchronized to a master clock via the IEEE 1588 Precision Time Protocol (PTP). Cameras can be synchronized by locking their frame generators to the Tri-Level Sync signal. Because this synchronization depends on a stable analog signal, the signal generation must be carefully designed around a PTP-managed clock. A camera's limited tolerance to clock frequency adjustments during continuous operation imposes rate limits on the PTP controller. Simulations using a software model demonstrate the controller instabilities that result from this rate limiting. The problem is addressed by adding a linear prediction mode to the controller, which estimates the offset change that can be realized during rate-limited frequency alignment. By adjusting the frequency in a timely manner, a large overshoot of the controller can be avoided. Additionally, a cascaded controller design that decouples the PTP from the clock update rate proved advantageous for increasing the frequency change the camera can tolerate. This paper demonstrates that an MCU is a viable platform for PTP-synchronized Tri-Level Sync generation. Our open-source implementation is available for use by the research community.
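The prediction idea can be made concrete with a little kinematics. If the frequency correction f (in ppb, where 1 ppb of frequency error accumulates 1 ns of offset per second) may only change at a rate r, ramping it back to zero takes |f| / r seconds and adds a further f·|f| / (2r) of offset. The Python sketch below is an illustration of that reasoning, not the controller from the paper: it uses the estimate to choose a frequency from which a rate-limited ramp ends exactly at zero offset, and compares this with a plain proportional servo under the same slew limit. The initial offset, rate limit, maximum correction, gain, and time step are hypothetical values.

```python
import math


def ramp_offset_ns(freq_ppb: float, rate_ppb_per_s: float) -> float:
    """Offset (ns) still accumulated while freq_ppb is ramped linearly to zero.

    The ramp takes T = |f| / r seconds and, since 1 ppb = 1 ns/s, its area is
    f * T / 2 = f * |f| / (2 * r).
    """
    return freq_ppb * abs(freq_ppb) / (2.0 * rate_ppb_per_s)


def simulate(offset_ns: float, rate_ppb_per_s: float, max_ppb: float,
             predictive: bool, kp: float = 1.0, dt: float = 0.01,
             t_end: float = 200.0) -> tuple[float, float]:
    """Return (final offset, peak overshoot) in ns for a slew-limited servo."""
    freq_ppb = 0.0
    overshoot = 0.0
    sign0 = math.copysign(1.0, offset_ns)
    for _ in range(int(t_end / dt)):
        if predictive:
            # Frequency whose linear ramp back to zero (see ramp_offset_ns)
            # removes exactly the current offset.
            target = -math.copysign(math.sqrt(2.0 * rate_ppb_per_s * abs(offset_ns)), offset_ns)
        else:
            # Plain proportional servo that ignores the slew-rate limit.
            target = -kp * offset_ns
        target = max(-max_ppb, min(max_ppb, target))
        # The camera only tolerates |d(freq)/dt| <= rate_ppb_per_s.
        max_step = rate_ppb_per_s * dt
        freq_ppb += max(-max_step, min(max_step, target - freq_ppb))
        offset_ns += freq_ppb * dt  # 1 ppb of frequency error = 1 ns/s of drift
        overshoot = max(overshoot, -sign0 * offset_ns)
    return offset_ns, overshoot


for mode in (False, True):
    final, peak = simulate(10_000.0, rate_ppb_per_s=10.0, max_ppb=100.0, predictive=mode)
    print(f"predictive={mode}: final {final:.2f} ns, overshoot {peak:.1f} ns")
```

With these assumed values the proportional servo overshoots by several hundred nanoseconds, because it is still running at full correction when the offset reaches zero and can only ramp down slowly. The predictive variant starts ramping down early and lands within about a nanosecond.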

Ethernet-based lighting-architecture: Image stabilization for high-resolution light functions

Single-pair Ethernet in combination with Ethernet endpoints provides a scalable basis for the direct control of sensors and actuators in zonal vehicle networks. As recently shown, this approach is also ideal for driving high-resolution light functions. The ability to transmit different parallel data streams to actuators opens up a wide field of new applications. Here, we show a method for stabilizing high-resolution light projections while driving. The stabilization of the light image is based on an inertial measurement unit that records vehicle movements in real time. An algorithm in a central control unit continuously calculates correction values for position and distortion compensation of the light distribution and sends this data to the lamp via Ethernet, preferably 10BASE-T1S. Two methods are combined in a proof of concept: predictive correction at video data rate and high-frequency image shifting in the headlamp's frame buffer.
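The fast path of such a scheme can be pictured as an integer pixel shift of the light distribution already stored in the frame buffer. The sketch below is a hypothetical, pure-Python illustration, not the headlamp firmware from the paper: it converts an assumed angular correction into whole pixels using an assumed angular pixel pitch, shifts the frame, and fills uncovered pixels with 0 (dark) so that no light is emitted where no content is known. Distortion compensation and sub-pixel shifts are left out, and the sign convention of the correction depends on the optics.

```python
def angle_to_pixels(delta_deg: float, deg_per_pixel: float) -> int:
    """Convert an angular correction into a whole-pixel shift (nearest integer)."""
    return round(delta_deg / deg_per_pixel)


def shift_frame(frame: list[list[int]], dx: int, dy: int, fill: int = 0) -> list[list[int]]:
    """Return a copy of frame moved dx columns right and dy rows down.

    Pixels that have no source after the shift are set to fill (dark by default).
    """
    height = len(frame)
    width = len(frame[0]) if height else 0
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        src_y = y - dy
        if not 0 <= src_y < height:
            continue
        for x in range(width):
            src_x = x - dx
            if 0 <= src_x < width:
                out[y][x] = frame[src_y][src_x]
    return out


frame = [[1, 2, 3],
         [4, 5, 6]]
print(shift_frame(frame, dx=1, dy=0))   # [[0, 1, 2], [0, 4, 5]]
print(shift_frame(frame, dx=0, dy=-1))  # [[4, 5, 6], [0, 0, 0]]
```

Because only two small integers per update are needed on the fast path, this part of the correction can run far more often than the full light distribution is retransmitted.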

Sub-Microsecond Time Synchronization for Network-Connected Microcontrollers

This paper presents a bare-metal implementation of the IEEE 1588 Precision Time Protocol (PTP) for network-connected microcontroller edge devices, enabling sub-microsecond time synchronization in automotive networks and multimedia applications. The implementation leverages the hardware timestamping capabilities of the microcontroller (MCU) to implement a two-stage phase-locked loop (PLL) for offset and drift correction of the hardware clock. Using the MCU platform as a PTP master enables the distribution of a Global Positioning System (GPS) timing signal with sub-microsecond accuracy over a network. The performance of the system is evaluated in master-slave configurations in which the platform is synchronized to three different masters: a GPS reference, an embedded platform, and a microcontroller. Results show that MCU platforms can be synchronized to an external GPS reference over a network with a standard deviation of 40.7 nanoseconds, enabling precise time synchronization for bare-metal microcontroller systems in various applications.
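The offset and drift correction builds on the standard IEEE 1588 delay request-response exchange: the master timestamps its Sync message on transmission (t1), the slave timestamps its reception (t2), the slave timestamps a Delay_Req on transmission (t3), and the master timestamps its reception (t4) and returns it in a Delay_Resp. Assuming a symmetric network path, offset and mean path delay follow from these four hardware timestamps. The Python below is a generic sketch of that textbook formula, not code from the paper's implementation, and the PLL that then steers the clock is not shown.

```python
def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> tuple[float, float]:
    """Return (offset_from_master, mean_path_delay) in ns.

    t1 and t4 are master timestamps; t2 and t3 are slave timestamps. Assumes the
    master-to-slave and slave-to-master delays are equal.
    """
    master_to_slave = t2 - t1  # path delay + slave offset
    slave_to_master = t4 - t3  # path delay - slave offset
    mean_path_delay = (master_to_slave + slave_to_master) / 2
    offset_from_master = (master_to_slave - slave_to_master) / 2
    return offset_from_master, mean_path_delay


# Example: slave clock 250 ns ahead of the master, 1000 ns one-way delay.
print(ptp_offset_and_delay(t1=0, t2=1_250, t3=5_000, t4=5_750))  # (250.0, 1000.0)
```

Any asymmetry between the two directions cannot be separated from the offset by this exchange; it appears directly as an offset error of half the difference between the two one-way delays.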

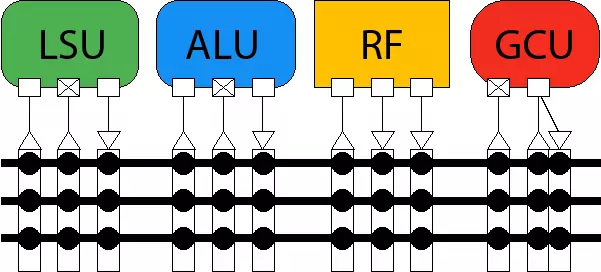

Synthetic Aperture Radar Algorithms on Transport Triggered Architecture Processors using OpenCL

Dynamic Model-Based Safety Margins for High-Density Matrix Headlight Systems

Real-time masking of vehicles in a dynamic road environment is a demanding task for adaptive driving beam systems of modern headlights. Next-generation high-density matrix headlights enable precise, high-resolution projections, while advanced driver assistance systems enable detection and tracking of objects with high update rates and low-latency estimation of the pose of the ego-vehicle. Accurate motion tracking and precise coverage of the masked vehicles are necessary to avoid glare while maintaining a high light throughput for good visibility. Safety margins are added around the mask to mitigate glare and flicker caused by the update rate and latency of the system. We provide a model to estimate the effects of spatial and temporal sampling on the safety margins for high- and low-density headlight resolutions and different update rates. The vertical motion of the ego-vehicle is simulated based on a dynamic model of a vehicle suspension system to model the impact of the motion-to-photon latency on the mask. Using our model, we evaluate the light throughput of an actual matrix headlight for the relevant corner cases of dynamic masking scenarios depending on pixel density, update rate, and system latency. We apply the masks provided by our model to a high beam light distribution to calculate the loss of luminous flux and compare the results to a light throughput approximation technique from the literature.
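As a rough, first-order illustration of how update rate, latency, and pixel density enter the margin, one can assume that a tracked vehicle moves at a constant angular rate, that the mask is stale by the motion-to-photon latency plus up to one update period, and that the mask edge must be rounded outward by up to one pixel. This simplified model is an assumption made here for illustration; it is not the model from the paper, which additionally simulates the ego-vehicle's suspension dynamics. All numbers in the example are hypothetical.

```python
import math


def safety_margin_deg(angular_rate_deg_s: float, latency_s: float,
                      update_rate_hz: float, pixel_pitch_deg: float) -> float:
    """First-order margin added to each side of a glare-free mask (degrees)."""
    # Temporal part: the target keeps moving during the latency and until the next update.
    temporal = angular_rate_deg_s * (latency_s + 1.0 / update_rate_hz)
    # Spatial part: the mask edge can only be placed on a pixel boundary.
    spatial = pixel_pitch_deg
    return temporal + spatial


def margin_pixels(margin_deg: float, pixel_pitch_deg: float) -> int:
    """Number of whole pixels needed to cover the margin."""
    return math.ceil(margin_deg / pixel_pitch_deg)


# Hypothetical case: 5 deg/s relative motion, 20 ms latency, 60 Hz updates, 0.05 deg pixels.
m = safety_margin_deg(5.0, 0.020, 60.0, 0.05)
print(f"{m:.3f} deg -> {margin_pixels(m, 0.05)} pixels")  # 0.233 deg -> 5 pixels
```

Even in this crude form the trade-off is visible: reducing latency or raising the update rate shrinks the temporal term, while a finer pixel pitch shrinks the spatial term, so less of the high beam has to be switched off around each masked vehicle.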

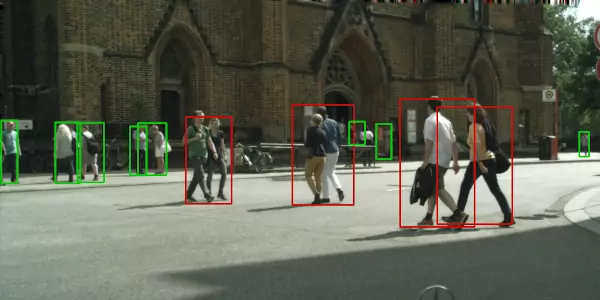

Deep Learning Based Classification of Pedestrian Vulnerability Trained on Synthetic Datasets

The reliable detection of vulnerable road users and the assessment of their actual vulnerability is an important task for the collision warning algorithms of driver assistance systems. Current systems make assumptions about the road geometry, which can lead to misclassification. We propose a deep learning-based approach to reliably detect pedestrians and classify their vulnerability based on the traffic area they are walking in. Since no pre-labeled datasets are available for this task, we developed a method that first trains a network on custom synthetic data and then uses this network to augment a customer-provided training dataset for a neural network that works on real-world images. The evaluation shows that our network accurately classifies the vulnerability of pedestrians in complex real-world scenarios without making assumptions about road geometry.

Probabilistic 3D Point Cloud Fusion on Graphics Processors for Automotive (Poster)

Design and Evaluation of a TTA-based ASIP for the Extraction of SIFT-Features

Design and Implementation of Digital Sensor Interfaces for a High Temperature ASIC Demonstration Platform

Awards

Best Session Presentation Award

Experience

Research Engineer

Low-latency realtime video signal processing algorithms on embedded hardware platforms for high-resolution LED matrix headlight systems. Our adaptive headlights prevent glare for drivers and pedestrians while increasing visibility and safety for nighttime driving.

Research Exchange

Development of an Application-Specific Instruction-Set Processor (ASIP) for computer vision applications such as SIFT feature extraction. We chose a 1024-bit-wide horizontal data-level parallelisation strategy to enable pipelined processing of HD images at high frame rates.

Internship

Design space exploration of FPGA-based hardware accelerators for neural-network-based object detection algorithms. Embedded Linux drivers for efficient DMA data transfers over AXI4 on an FPGA-based SoC.

Education

Leibniz University Hannover

Leibniz University Hannover

Leibniz University Hannover

Links

Institute of Microelectronic Systems: ims.uni-hannover.de

Google Scholar: scholar.google.com

ORCiD: orcid.org

Xing: xing.com

GitHub: github.com